Chain of Thought Strategy: Solving Complex Logic Puzzles with AI

Why do AI models sometimes fail at seemingly simple logic puzzles? Often, it's because they jump straight to the answer without showing their work. Chain of Thought (CoT) forces models to break down problems into manageable steps, significantly reducing errors in deductive tasks.

What is the Logic Puzzle Scenario?

We are using a variation of the famous Zebra Puzzle, also known as Einstein's Riddle. Legend has it that Albert Einstein created this puzzle as a boy and claimed that only 2% of the world's population could solve it, although there is no evidence that he wrote it. The problem requires strict deductive reasoning and constraint satisfaction: a single skipped step breaks the entire logical chain.

Context & Background: The puzzle describes a street with five houses, each painted a different color. In each house lives a person of a different nationality. These five owners each drink a certain type of beverage, smoke a certain brand of cigar, and keep a certain pet. No two owners have the same pet, smoke the same brand of cigar, or drink the same beverage.

- Learn more about the puzzle: Zebra Puzzle on Wikipedia

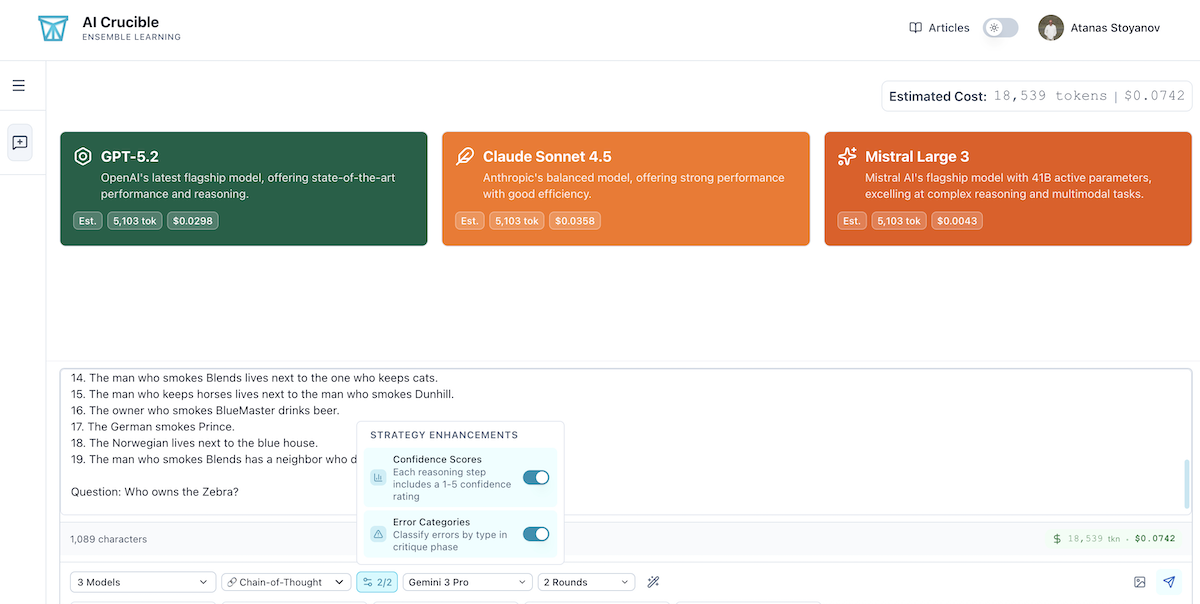

How do you set up the dashboard?

1. Dashboard Setup

To reproduce this experiment, navigate to the AI Crucible Dashboard, select Chain of Thought (🔗) from the strategy dropdown, and enter the custom prompt below.

Solve this logic puzzle step-by-step.

1. There are 5 houses in 5 different colors.

2. In each house lives a person with a different nationality.

3. These five owners drink a certain type of beverage, smoke a certain brand of cigar and keep a certain pet.

4. No owners have the same pet, smoke the same brand of cigar or drink the same beverage.

5. The Brit lives in the red house.

6. The Swede keeps dogs as pets.

7. The Dane drinks tea.

8. The green house is on the left of the white house.

9. The green house's owner drinks coffee.

10. The person who smokes Pall Mall rears birds.

11. The owner of the yellow house smokes Dunhill.

12. The man living in the center house drinks milk.

13. The Norwegian lives in the first house.

14. The man who smokes Blends lives next to the one who keeps cats.

15. The man who keeps horses lives next to the man who smokes Dunhill.

16. The owner who smokes BlueMaster drinks beer.

17. The German smokes Prince.

18. The Norwegian lives next to the blue house.

19. The man who smokes Blends has a neighbor who drinks water.

Question: Who owns the Zebra?
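To check the puzzle independently of any model, the clues can be encoded as constraints and searched exhaustively. The sketch below is a generate-and-test search with early filtering, not the method any of the models or the dashboard used. It assumes house 1 is the leftmost ("first") house, that clue 8 means the green house is immediately to the left of the white house, and that the zebra is the one pet the clues never mention; the function and variable names are hypothetical.

```python
from itertools import permutations


def next_to(a: int, b: int) -> bool:
    """True when houses a and b are adjacent."""
    return abs(a - b) == 1


def solve_zebra() -> list[str]:
    """Return the zebra owner's nationality for every assignment satisfying clues 5-19."""
    houses = range(1, 6)  # house 1 is the leftmost ("first"); house 3 is the center
    answers = []
    # Each loop variable holds the house number where that attribute value lives.
    for red, green, white, yellow, blue in permutations(houses):
        if white != green + 1:  # clue 8 (assumed: green is immediately left of white)
            continue
        for brit, swede, dane, norwegian, german in permutations(houses):
            if brit != red or norwegian != 1 or not next_to(norwegian, blue):  # clues 5, 13, 18
                continue
            for tea, coffee, milk, beer, water in permutations(houses):
                if dane != tea or coffee != green or milk != 3:  # clues 7, 9, 12
                    continue
                for pall_mall, dunhill, blends, bluemaster, prince in permutations(houses):
                    if (dunhill != yellow or bluemaster != beer  # clues 11, 16
                            or prince != german or not next_to(blends, water)):  # clues 17, 19
                        continue
                    for dogs, birds, cats, horses, zebra in permutations(houses):
                        if (dogs != swede or birds != pall_mall  # clues 6, 10
                                or not next_to(blends, cats)  # clue 14
                                or not next_to(horses, dunhill)):  # clue 15
                            continue
                        owners = {brit: "Brit", swede: "Swede", dane: "Dane",
                                  norwegian: "Norwegian", german: "German"}
                        answers.append(owners[zebra])
    return answers


print(solve_zebra())  # → ['German']
```

The function returns one entry per valid assignment, so a single-element list also confirms that the answer is unique under these assumptions. Reading clue 8 as "somewhere to the left" only requires changing that check to green < white; the returned list then shows whether that reading still yields a single answer.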

2. Strategy Configuration

We enabled specific CoT features to enhance accuracy:

- Confidence Scores: Each reasoning step includes a 1-5 rating.

- Error Categories: Potential fallacies found during the critique phase are labeled by category.
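One way to picture these two features is as fields on each reasoning step. The sketch below is a hypothetical data structure, not AI Crucible's internal format: the class name, the error labels in ERROR_CATEGORIES, and the review threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical labels for the critique phase; the dashboard's own categories may differ.
ERROR_CATEGORIES = {"unsupported_assumption", "contradiction", "skipped_step"}


@dataclass
class ReasoningStep:
    number: int
    claim: str
    clues_used: list[int]
    confidence: int  # 1 = guess, 5 = forced directly by the cited clues
    errors: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Reject ratings outside the 1-5 scale and labels outside the known set.
        if not 1 <= self.confidence <= 5:
            raise ValueError("confidence must be between 1 and 5")
        unknown = self.errors - ERROR_CATEGORIES
        if unknown:
            raise ValueError(f"unknown error categories: {sorted(unknown)}")


def steps_to_review(steps: list[ReasoningStep], threshold: int = 3) -> list[ReasoningStep]:
    """Steps a critique pass should revisit: low confidence or at least one flagged error."""
    return [s for s in steps if s.confidence < threshold or s.errors]


steps = [
    ReasoningStep(1, "The Norwegian lives in house 1", [13], 5),
    ReasoningStep(2, "House 2 is blue", [13, 18], 5),
    ReasoningStep(3, "The Blends smoker lives in house 4", [14], 2),
]
print([s.number for s in steps_to_review(steps)])  # → [3]
```

Keeping the cited clues on each step makes a low-confidence claim easy to trace back to the constraints it depends on.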

Rounds: We selected 1 Round.

- Reasoning: Chain of Thought is primarily a single-pass prompting technique. We want to test the models' ability to maintain a coherent logical chain in one continuous flow, without relying on multi-turn corrections or external debate for this initial benchmark.

3. Model Selection

We selected three of the most advanced models available, plus a distinct arbiter:

- GPT-5.2 (OpenAI): The state-of-the-art flagship model known for superior reasoning.

- Claude Sonnet 4.5 (Anthropic): Excellent at following complex instructions with high efficiency.

- Mistral Large 3 (Mistral): A powerful European model providing a diverse perspective.

- Arbiter: Gemini 3 Pro (Google) - Chosen for its massive context window and agentic capabilities to validate the logic.

What outcomes do we expect?

We expect a standard run without CoT ("Direct Answer") to often guess or make a leap of logic. With Chain of Thought, we expect models to decompose the problem into variables, deduce "easy" facts first (e.g., "Norwegian lives in the first house"), and eventually converge on the correct answer through step-by-step verification.
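The "easy facts first" pattern is a form of constraint propagation: each value starts out possible in every house, and clues that mention a single house shrink those candidate sets before any guessing happens. This is a minimal sketch under the assumption that houses are numbered 1 to 5 from the left; the helper names are hypothetical, and it covers only clues 12, 13, and 18.

```python
HOUSES = range(1, 6)  # house 1 is leftmost ("first"); house 3 is the center


def prune_next_to(domain: set[int], anchor: set[int]) -> set[int]:
    """Keep only houses adjacent to at least one candidate house of the anchor."""
    return {h for h in domain if any(abs(h - a) == 1 for a in anchor)}


def easy_facts() -> dict[str, set[int]]:
    # Every value starts out possible in every house.
    domains = {name: set(HOUSES) for name in ("Norwegian", "milk", "blue")}
    domains["Norwegian"] = {1}  # clue 13: the Norwegian lives in the first house
    domains["milk"] = {3}       # clue 12: the center house drinks milk
    domains["blue"] = prune_next_to(domains["blue"], domains["Norwegian"])  # clue 18
    return domains


print(easy_facts())  # → {'Norwegian': {1}, 'milk': {3}, 'blue': {2}}
```

Once the Norwegian is fixed in house 1, the only house adjacent to it is house 2, so clue 18 pins the blue house without any search. A well-structured chain of thought should surface these forced facts in its first few steps.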

What were the analysis results?

- Total Cost: ~$0.14

- Execution Time: 3m 42s

- Link: View Full Chat

Model Comparison Table (Round 1)

| Model | Time | Cost | Format | Style |
|---|---|---|---|---|
| GPT-5.2 | 86.4s | ~$0.035 | Proof Sketch | Concise; initially resisted the "step-by-step" instruction. |
| Claude Sonnet 4.5 | 37.9s | ~$0.040 | Narrative | Extremely verbose (33 steps), highly explicit. |
| Mistral Large 3 | 87.2s | ~$0.009 | Tables | Visual state tracking, self-correcting. |
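The speed and cost comparisons in this section are ratios of the table's figures. This short sketch computes them for Mistral Large 3 versus Claude Sonnet 4.5; the dictionary layout and function name are illustrative, and the costs are the table's approximate values.

```python
# Response times (seconds) and approximate costs (USD) from the Round 1 table.
RUNS = {
    "GPT-5.2": {"seconds": 86.4, "usd": 0.035},
    "Claude Sonnet 4.5": {"seconds": 37.9, "usd": 0.040},
    "Mistral Large 3": {"seconds": 87.2, "usd": 0.009},
}


def compare(slow: str, fast: str) -> dict[str, float]:
    """Relative time and cost of model `slow` versus model `fast`."""
    t_slow, t_fast = RUNS[slow]["seconds"], RUNS[fast]["seconds"]
    return {
        "time_ratio": t_slow / t_fast,      # how many times as long `slow` took
        "time_saved": 1 - t_fast / t_slow,  # fraction of `slow`'s time that `fast` saved
        "cost_ratio": RUNS[fast]["usd"] / RUNS[slow]["usd"],  # how many times cheaper `slow` was
    }


result = compare("Mistral Large 3", "Claude Sonnet 4.5")
print(f"time ratio {result['time_ratio']:.1f}x, "
      f"time saved {result['time_saved']:.0%}, "
      f"cost ratio {result['cost_ratio']:.1f}x")
# → time ratio 2.3x, time saved 57%, cost ratio 4.4x
```

A 57% figure describes how much of Mistral's time Claude saved, not how much slower Mistral was; measured the other way, Mistral took about 130% longer.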

Deep Analysis

- Claude Sonnet 4.5 was the speed demon, delivering a massive 33-step deductive chain in under 38 seconds. It didn't just solve the puzzle; it showed every single micro-verification.

- Mistral Large 3 took about 2.3 times as long as Claude (87.2s vs. 37.9s) but was roughly 4x cheaper (~$0.009 vs. ~$0.040). Its "killer feature" was the use of ASCII tables to visualize the state of the houses after every few clues, which made it the most reader-friendly. Crucially, in Step 29, Mistral caught itself in a contradiction regarding the "Blends" smoker and backtracked, a behavior often absent in non-reasoning models.

- GPT-5.2 provided the correct answer but felt less "cooperative," insisting on a "proof sketch" rather than the requested step-by-step format.
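The contradiction Mistral caught in Step 29 amounts to testing clue 19 against a partial assignment and discarding any placement that fails. The sketch below shows that check as a pruning step in a backtracking search. The function names are hypothetical, and the drink layout in the example is illustrative (it is the standard solution's layout), not Mistral's actual intermediate state.

```python
def neighbors(house: int) -> list[int]:
    """Adjacent house numbers on a street of five houses numbered 1-5."""
    return [h for h in (house - 1, house + 1) if 1 <= h <= 5]


def violates_clue_19(blends_house: int, drinks: dict[int, str]) -> bool:
    """Clue 19: the Blends smoker has a neighbor who drinks water.

    The clue is only provably violated once every neighbor's drink is known
    and none of them is water; an incomplete state is left open.
    """
    ns = neighbors(blends_house)
    return all(h in drinks for h in ns) and all(drinks[h] != "water" for h in ns)


def surviving_blends_houses(drinks: dict[int, str], candidates) -> list[int]:
    """Backtrack out of every Blends placement that contradicts clue 19."""
    return [h for h in candidates if not violates_clue_19(h, drinks)]


drinks = {1: "water", 2: "tea", 3: "milk", 4: "coffee", 5: "beer"}
print(violates_clue_19(4, drinks))                  # → True (neighbors drink milk and beer)
print(surviving_blends_houses(drinks, range(1, 6)))  # → [2]
```

Returning False for an incomplete state matters: flagging a contradiction before all neighbors are known would prune valid branches.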

Why the "Best Response" (Arbiter) Wins

The final output generated by the Arbiter (Gemini 3 Pro) wasn't just a copy-paste of a single model. It synthesized the clarity of Mistral's tables with the concise logical grouping of GPT-5.2.

Instead of forcing the user to read 33 narrative steps (Claude) or scroll through 30+ intermediate ASCII tables (Mistral), the Arbiter produced:

- A clean 7-step summary of the key logical milestones.

- A final summary table that presented the solution in a single glance.

This synthesis effectively filtered out the "noise" of the reasoning process while preserving the "signal" of the final proof.

Why use Chain of Thought?

Chain of Thought is not just about getting the right answer; it's about verifiable reasoning.

Benefits observed in this run:

- Visual State Tracking: Mistral's use of tables demonstrated that CoT can be visual, helping humans audit the "memory" of the model.

- Self-Correction: In Step 29, Mistral explicitly caught a contradiction ("Neither neighbor drinks water, which contradicts clue 19") and backtracked to correct its assumption. A standard model might have just hallucinated a solution to fit.

- Consensus: All three models converged on the exact same solution using three different methods (Proof Sketch, Native Narrative, and Tabular Tracking). This independent triangulation, together with the fact that the final assignment can be checked directly against every clue, gives us high confidence in the result.
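Agreement across the three models can be measured with a simple vote count. This is a minimal sketch with hypothetical names; it measures agreement only, so models that share the same mistake would still score 100%, which is why checking the final assignment against the clues remains the stronger evidence.

```python
from collections import Counter


def consensus(answers: dict[str, str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of models that gave it."""
    counts = Counter(answers.values())
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(answers)


answer, agreement = consensus({
    "GPT-5.2": "German",
    "Claude Sonnet 4.5": "German",
    "Mistral Large 3": "German",
})
print(answer, f"{agreement:.0%}")  # → German 100%
```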

By using an ensemble of CoT reasoners, we proved that the German owns the Zebra with verifiable, step-by-step evidence.

Related Articles

- Seven Ensemble Strategies - Explore other methods like Expert Panel and Competitive Refinement.

- Mistral Large 3 Comparison - Deep dive into the model that won this logic challenge.

- GPT-5.2 Benchmark - See how GPT-5.2 performs on other reasoning tasks.

- Expert Panel Strategy - Learn about a multi-model approach for subjective topics.